Previously I have demonstrated how streaming data can be read and transformed in Apache Spark. This time I use Spark to persist that data in PostgreSQL.

Quick recap – Spark and JDBC

As mentioned in the post related to ActiveMQ, Spark and Bahir, Spark does not provide a JDBC sink out of the box. Therefore, I will have to use the foreach sink and implement an extension of the org.apache.spark.sql.ForeachWriter. It will take each individual data row and write it to PostgreSQL.

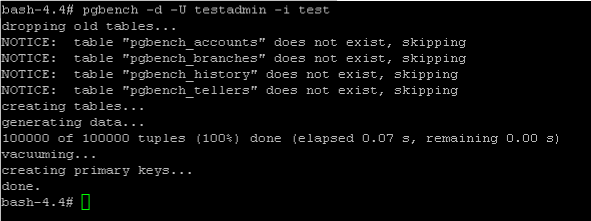

Preparing PostgreSQL

Even though I want to use PostgreSQL, I am actually