Just recently I needed to explore how to get IBM Informix and Entity Framework Core to work together with an existing database, so I decided to document my findings in this simple step-by-step walk-through.

Prerequisites

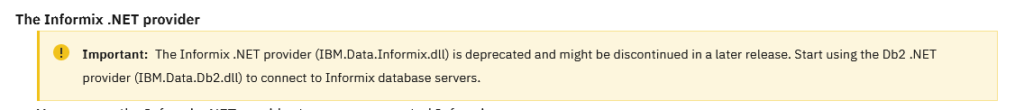

As mentioned here, the IBM DB2® provider option of the IBM® Data Server Provider for .NET is now the preferred Informix® provider for developing new applications.

The related site for the IBM Data Server Provider for .NET states that the former Informix-specific provider (IBM.Data.Informix) is now deprecated.

Although this statement actually applies to the provider for .NET, … more